Investigating and addressing publication and other biases in meta-analysis

INTRODUCTION

Studies that show a substantial treatment effect are more likely than other studies to be published, to be published in English, to be cited by other authors, and to be published more than once. As a result, such studies are more likely to be found and included in systematic reviews, which introduces bias. Another major source of bias is the low methodological quality of studies included in a systematic review. Small studies are more susceptible to all of these biases than large studies. The smaller the study, the larger the treatment effect needed for its results to reach statistical significance. Bias in a systematic review may therefore be detected by looking for an association between the size of the treatment effect and the size of the study; such associations can be examined both graphically and statistically.

Graphical methods for detecting bias

Funnel plots

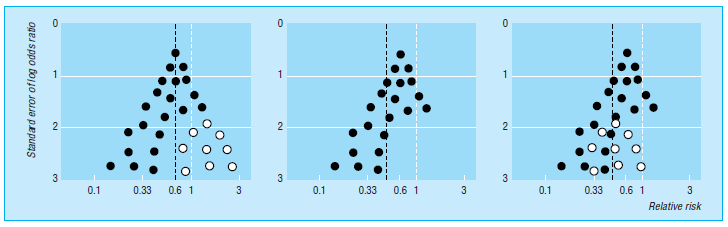

Funnel plots were first used in educational research and psychology. They are scatter plots of the treatment effects estimated from individual studies (horizontal axis) against a measure of study size (vertical axis). Because the precision with which the underlying treatment effect is estimated increases with a study's sample size, effect estimates from small studies scatter widely at the bottom of the graph, and the spread narrows among larger studies. In the absence of bias, the plot resembles a symmetrical inverted funnel (fig 1).

Fig 1 Hypothetical funnel plots [1]
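To illustrate why an unbiased funnel plot takes this shape, the following Python sketch simulates hypothetical two-arm trials and compares the scatter of estimates from small and large trials. The true effect of 0.3, the unit outcome variance, the list of sample sizes, and the function name `simulate_studies` are illustrative assumptions, not values taken from the cited paper.

```python
import math
import random
import statistics


def simulate_studies(n_studies=200, true_effect=0.3, seed=1):
    """Simulate unbiased two-arm trials (assumed outcome SD of 1 per arm).

    Returns a list of (effect_estimate, standard_error, n_per_arm) tuples.
    """
    rng = random.Random(seed)
    studies = []
    for _ in range(n_studies):
        n_per_arm = rng.choice([10, 20, 50, 100, 500, 1000])
        # Standard error of a difference in means with unit variance per arm.
        se = math.sqrt(2.0 / n_per_arm)
        # Each trial estimates the same true effect, with sampling error only.
        estimate = rng.gauss(true_effect, se)
        studies.append((estimate, se, n_per_arm))
    return studies


studies = simulate_studies()

# Funnel plot coordinates: treatment effect (x) against study size (y).
points = [(estimate, n_per_arm) for estimate, _, n_per_arm in studies]

# Compare the spread of estimates at the bottom and the top of the funnel.
small_estimates = [est for est, _, n in studies if n <= 20]
large_estimates = [est for est, _, n in studies if n >= 500]
print("SD of estimates, small trials:", round(statistics.stdev(small_estimates), 3))
print("SD of estimates, large trials:", round(statistics.stdev(large_estimates), 3))
```

The `points` list can be passed to any plotting library to draw the funnel. Because no selection is applied here, the estimates scatter symmetrically around the assumed true effect, widely for small trials and narrowly for large ones.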

If smaller trials showing no statistically significant benefit of the therapy (open circles in fig 1, left) go unreported, the resulting reporting bias produces an asymmetrical funnel plot (fig 1, centre) with a gap in the bottom right. In this case, the combined effect from the meta-analysis overestimates the treatment's effect. Smaller studies are also, on average, conducted and analysed with less methodological rigour than larger studies. Asymmetry may therefore also arise from the overestimation of treatment effects in smaller trials of lower methodological quality (fig 1, right).
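The overestimation described above can be reproduced in a small simulation. The sketch below is only an illustration under stated assumptions: the true effect of 0.2, the sample sizes, and the publication rule in `is_published` (large trials are always published, small trials only when significantly positive) are hypothetical choices, and the pooling uses a simple inverse-variance (fixed-effect) average.

```python
import math
import random

TRUE_EFFECT = 0.2  # assumed true effect; illustrative only


def simulate_trials(n_trials=3000, seed=7):
    """Simulate (estimate, standard_error, n_per_arm) for hypothetical trials."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_trials):
        n_per_arm = rng.choice([10, 20, 30, 40, 50, 100])
        se = math.sqrt(2.0 / n_per_arm)  # unit outcome variance per arm
        trials.append((rng.gauss(TRUE_EFFECT, se), se, n_per_arm))
    return trials


def is_published(estimate, se, n_per_arm):
    """Hypothetical rule: small trials are published only if significantly positive."""
    if n_per_arm >= 100:
        return True
    return estimate / se > 1.96


def pooled_estimate(trials):
    """Inverse-variance weighted (fixed-effect) pooled estimate."""
    weights = [1.0 / se ** 2 for _, se, _ in trials]
    weighted_sum = sum(w * est for w, (est, _, _) in zip(weights, trials))
    return weighted_sum / sum(weights)


all_trials = simulate_trials()
published = [trial for trial in all_trials if is_published(*trial)]

pooled_all = pooled_estimate(all_trials)
pooled_published = pooled_estimate(published)
print("Pooled estimate, all trials:      ", round(pooled_all, 3))
print("Pooled estimate, published trials:", round(pooled_published, 3))
```

Under these assumptions the pooled estimate from the published trials is larger than the one from all trials. The size of the gap depends entirely on the assumed publication rule and on the mix of small and large trials.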

Statistical methods for detecting and correcting for bias

Selection models

“Selection models” model the process that determines whether findings are published, based on the premise that a study's P value affects its chance of publication. The methods can be extended to estimate treatment effects adjusted for the estimated publication bias. Without strong assumptions about the nature of the selection process, however, they require a large number of studies covering a wide range of P values. Published applications include a meta-analysis of homoeopathy trials, in which correction for publication bias may explain part of the association seen in meta-analyses of these studies.

The “correction” of effect estimates for publication bias is complex and a source of continuing debate. The modelling assumptions used may have a substantial effect on the results. Many factors can influence the likelihood that a set of results is published, and it is difficult, if not impossible, to model them all correctly. Furthermore, publication bias is only one of the possible explanations for an association between treatment effect and study size. It is therefore prudent to use statistical methods that model selection mechanisms to detect bias rather than to correct for it.
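To make the idea concrete, here is a deliberately simple sketch of a selection model used as a sensitivity analysis. It is not the method of any particular published application. It assumes a fixed-effect model, a one-step weight function in which non-significant studies are published with a fixed relative probability `w_nonsig` (which must be greater than 0), and a grid search in place of a proper optimiser. The study data and all function names are hypothetical.

```python
import math


def normal_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def log_likelihood(mu, studies, w_nonsig, z_crit=1.96):
    """Log-likelihood of a common effect mu under a one-step selection model."""
    total = 0.0
    for estimate, se in studies:
        # Normal log-density of the observed estimate (constants dropped).
        total += -((estimate - mu) ** 2) / (2.0 * se ** 2)
        # Probability that a study of this precision is significantly positive.
        p_signif = 1.0 - normal_cdf(z_crit - mu / se)
        # Normalising term: overall chance that such a study gets published.
        total -= math.log(p_signif + w_nonsig * (1.0 - p_signif))
    return total


def adjusted_effect(studies, w_nonsig, lo=-1.0, hi=1.0, step=0.001):
    """Grid-search the value of mu that maximises the selection log-likelihood."""
    n_steps = int(round((hi - lo) / step))
    grid = [lo + i * step for i in range(n_steps + 1)]
    return max(grid, key=lambda mu: log_likelihood(mu, studies, w_nonsig))


# Hypothetical (estimate, standard_error) pairs; small studies show larger effects.
studies = [(0.80, 0.40), (0.65, 0.30), (0.50, 0.24),
           (0.30, 0.15), (0.15, 0.10), (0.12, 0.07)]

# Vary the assumed publication probability of non-significant studies.
for w_nonsig in (1.0, 0.5, 0.2):
    print("w_nonsig =", w_nonsig, "-> adjusted effect:",
          round(adjusted_effect(studies, w_nonsig), 3))
```

With `w_nonsig = 1.0` there is no selection, and the result matches the ordinary inverse-variance pooled estimate up to the grid resolution. Smaller values of `w_nonsig` pull the estimate downwards. The adjusted value is driven by the assumed weight, which is why such output is better read as a check on robustness than as a corrected effect.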

Conclusions

Investigators should attempt to locate all published studies and to seek out unpublished material when conducting a systematic review and meta-analysis. The quality of the component studies should also be scrutinised. Selection models for publication bias are most likely to be useful in sensitivity analyses that examine the robustness of a meta-analysis to possible publication bias. In most meta-analyses, funnel plots should be used to provide a visual assessment of whether treatment effect estimates are associated with study size. Statistical methods may be used to examine the evidence for funnel plot asymmetry and to investigate alternative explanations for heterogeneity between studies. However, these methods are limited, especially in meta-analyses based on a small number of small studies.

The findings of such meta-analyses should always be treated with caution. Statistically combining data from new trials with a body of flawed research does not remove the bias. When a systematic review shows that the evidence to date is unreliable for one or more of the reasons described above, there is currently no consensus on how to guide clinical practice or future research.

References

[1] Sterne JAC, Egger M, Davey Smith G. Investigating and dealing with publication and other biases in meta-analysis. BMJ. 2001;323(7304):101-105.

[2] Page MJ, Sterne JAC, Higgins JPT, Egger M. Investigating and dealing with publication bias and other reporting biases in meta-analyses of health research: A review. Res Syn Meth. 2021;12:248-259. https://doi.org/10.1002/jrsm.1468
